Ok, you've lost me a bit here on the context of the discussion (not on the context of the science - I have a physics degree.)

Sure, we're way OT, but we got here by the usual organic thread drift:

- OP asked about P45+ lifetime

- Graham commented on lifetime effects of sensor and LCD aging by radiation exposure

- I agreed with Graham, but added that HST sensors last well despite intense radiation bombardment

- Erik commented that HST must be a great long telephoto

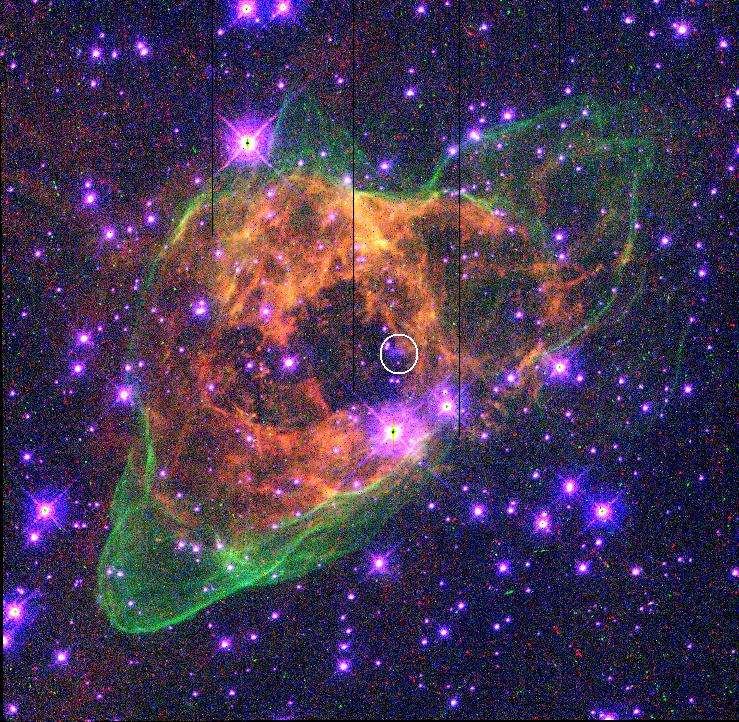

- I agreed, and showed an example from my own HST work...which reminded me of a time when someone who should have known better criticized NASA for using such large f/numbers, on the basis of the spectre of diffraction.

- and so here we are, talking about space telescope point-spread functions and diffraction, when we should be talking about the lifetime of a particular digital back.

You seemed to be implying earlier with your sarcastic "mustn't shoot at such small f-stops" comment that those who consider such things are somehow missing the bigger picture.

No, I was just joking about the tendency among some "experts" to decry anything slower than about f/16 as a no-go area because of diffraction - Synn put it well, "the diffraction police". As I said, I was involved in a discussion, a long time ago, where the HST's large f-numbers needlessly raised eyebrows. They weren't missing the bigger picture - they were missing the context that detail (angular resolution) is determined by physical aperture and not by focal ratio, and that there are two ways to arrive at a slower focal ratio: take a fast lens and stop it down; or take a fast lens and optically amplify its focal length. The first way decreases the resolution that the system is capable of, and that's the only way they were thinking of; but the second way preserves the angular resolution (and the photon collecting area), and that's what happens with telescopes.
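To put rough numbers on the two routes, here is a minimal Python sketch using the Rayleigh criterion, theta = 1.22 * lambda / D. The 100 mm f/2.8 starting lens, the 8x amplification and the 550 nm wavelength are illustrative assumptions of mine, not figures from the discussion.

```python
import math

WAVELENGTH_M = 550e-9  # assumed: green light, roughly mid-visible


def pupil_diameter_m(focal_length_m, f_number):
    """Entrance pupil diameter D = f / N."""
    return focal_length_m / f_number


def rayleigh_limit_arcsec(diameter_m, wavelength_m=WAVELENGTH_M):
    """Rayleigh criterion, theta = 1.22 * lambda / D, in arcseconds."""
    theta_rad = 1.22 * wavelength_m / diameter_m
    return math.degrees(theta_rad) * 3600.0


# Hypothetical starting lens: 100 mm at f/2.8.
base = pupil_diameter_m(0.100, 2.8)

# Route 1: stop the same lens down to f/22.4 (focal length unchanged).
stopped_down = pupil_diameter_m(0.100, 22.4)

# Route 2: amplify the focal length 8x (100 mm -> 800 mm).
# The entrance pupil is untouched, so the f-number becomes 2.8 * 8 = 22.4.
amplified = pupil_diameter_m(0.800, 22.4)

for label, d in [("base", base), ("stopped down", stopped_down), ("amplified", amplified)]:
    print(f"{label:>12}: D = {d * 1000:6.2f} mm, "
          f"limit = {rayleigh_limit_arcsec(d):5.2f} arcsec")
```

Both routes end up at the same f-number, but only the stopped-down lens has a smaller pupil, so only it has a coarser angular limit.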

Regardless of "chunky pixels", there is no arguing whatsoever about the simple fact that because that image you are sharing was shot at such a small aperture, you are losing a huge quantity of information because of diffraction problems.

When you say "that image you are sharing was shot at such a small aperture", you are falling into the same misconception as the folks I was describing above - thinking with the photographer part of your brain rather than the physicist part. It wasn't shot at a small aperture - not unless you regard 2.4 metres as small. It was shot at a slow f/ratio, but that's a different thing. They could have designed the HST as a much faster optical system, while maintaining the 2.4m entrance pupil aperture constraint...it would resolve neither more nor less detail; it would just require smaller pixels to maintain reasonable PSF sampling, and smaller pixels would be quicker to saturate and generally have proportionally higher readout noise. So, they made it slow, and used large-pixel (15 micron) CCDs.
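The trade-off between focal ratio and pixel size can be sketched the same way. With the aperture fixed at 2.4 m, the angular limit doesn't change, but the physical size of the Airy disk on the detector (2.44 * lambda * N across, to the first dark ring) scales with the f-number. The f/6 and f/24 values below are illustrative assumptions only, not a statement of any actual HST instrument configuration, and the "two pixels per Airy diameter" sampling target is likewise just a convenient assumption.

```python
import math

WAVELENGTH_M = 550e-9  # assumed: green light
APERTURE_M = 2.4       # entrance pupil diameter quoted in the post


def angular_limit_arcsec(diameter_m, wavelength_m=WAVELENGTH_M):
    """Rayleigh limit 1.22 * lambda / D in arcseconds; no f-number involved."""
    return math.degrees(1.22 * wavelength_m / diameter_m) * 3600.0


def airy_diameter_um(f_number, wavelength_m=WAVELENGTH_M):
    """Airy disk diameter at the focal plane, 2.44 * lambda * N, in microns."""
    return 2.44 * wavelength_m * f_number * 1e6


def pixel_pitch_um(f_number, pixels_per_airy_diameter=2.0):
    """Pixel pitch needed to put a chosen number of pixels across the Airy disk."""
    return airy_diameter_um(f_number) / pixels_per_airy_diameter


print(f"Angular limit at D = {APERTURE_M} m: "
      f"{angular_limit_arcsec(APERTURE_M):.3f} arcsec (same for every f-number)")

for n in (6.0, 24.0):  # hypothetical fast and slow designs on the same aperture
    print(f"f/{n:g}: Airy diameter = {airy_diameter_um(n):5.1f} um, "
          f"pixel pitch for 2 px across = {pixel_pitch_um(n):5.1f} um")
```

The faster design needs pixels four times smaller for the same sampling, which is the point about saturation and readout noise above.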

I'm not referring to the cross here - I'm referring to the fact that you have point sources of light that, due to diffraction issues that are exacerbated by the size of the chosen aperture, are masking data in the image.

Look at any of the "bright" stars in that image. Due to diffraction, they are destroying data that would (theoretically) otherwise be available.

You are absolutely correct that there is theoretically more data/resolution to be had. But it would take a larger diameter telescope to obtain it - and if you were, say, to increase the aperture 2x, then the optical surface areas to be meticulously figured increase 4x, the mass and volume increase 8x, the launcher capacity and payload requirements increase by a similar amount, the construction and testing budgets increase probably 10x, and everything takes longer, so launch is delayed by years...and then at the end of it all, you find that even when you jump up and down on it, it doesn't fit in the Space Shuttle's payload bay :facesmack: This makes the phrase "exacerbated by the size of the chosen aperture" inappropriate in this context - it implies that there was an easy choice available to use a larger aperture.
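The 4x and 8x figures are just geometric scaling, assuming the whole telescope is enlarged in proportion (my simplifying assumption; real designs won't scale this cleanly). A tiny sketch, with a hypothetical helper name:

```python
def scale_telescope(k):
    """Scaling factors for a telescope enlarged by linear factor k.

    Assumes geometric similarity: every dimension grows by the same factor.
    """
    return {
        "angular_resolution_gain": k,   # diffraction limit shrinks as 1/D
        "optical_surface_area": k ** 2,  # area to be figured grows as D^2
        "mass_and_volume": k ** 3,       # bulk grows as D^3
    }


factors = scale_telescope(2)
for name, value in factors.items():
    print(f"{name}: {value}x")
```

So doubling the aperture buys 2x finer detail for 4x the glass and 8x the bulk, before the budget and schedule effects are counted.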

Isn't that the reason why photography "gurus" take diffraction into consideration?

It is the reason, but I hope I've shown why it's wrong to apply photographic diffraction considerations to all imaging contexts.

Cheers,

Ray